The Evolution of ChatGPT: From Plugins to Custom GPTs

Discover how ChatGPT plugins enhance AI capabilities, from web browsing to data analysis. Learn about the evolution to Custom GPTs and their impact on productivity and efficiency.

The advent of large language models (LLMs) has profoundly reshaped the landscape of artificial intelligence, with OpenAI's ChatGPT emerging as a pivotal force since its introduction in November 2022. Initially powered by the GPT-3.5 model, ChatGPT demonstrated remarkable capabilities in understanding and generating human-like text, rapidly capturing widespread interest. However, a fundamental characteristic of these foundational LLMs is that their knowledge is inherently "frozen" at the point of their last training data cutoff. This inherent limitation meant that ChatGPT, despite its conversational prowess, could not natively access real-time information, interact with external services, or perform actions in the real world. This architectural constraint created an immediate and compelling demand for mechanisms that could extend its utility beyond mere text generation.

The strategic imperative for OpenAI became clear: to transform ChatGPT from a static conversational agent into a dynamic, interactive platform capable of addressing real-world tasks. This vision encompassed functionalities such as reading web links, generating videos, searching for flights, or facilitating online purchases, aiming to establish ChatGPT as a versatile productivity tool. Both ChatGPT Plugins and the subsequent Custom GPTs represent OpenAI's iterative and evolving solutions to this extensibility challenge. These mechanisms are critical enablers for streamlining workflows, enhancing user experiences, and automating complex tasks across a diverse array of domains. The progression from plugins to Custom GPTs signifies a deliberate and strategic refinement in OpenAI's approach to AI customization, driven by the pursuit of greater flexibility, simplified development, and the cultivation of a more robust and expansive application ecosystem.

A critical observation underpinning this evolution is the understanding that the inherent limitations of foundational LLMs serve as a primary catalyst for extensibility. The core capacity of an LLM to process and generate language, while powerful, is constrained by its training data and its inability to directly interface with dynamic external environments. This means that for LLMs to achieve practical utility beyond isolated conversational tasks, they must be equipped with robust interfaces to external data sources and actionable tools. Without such extensibility mechanisms, the practical application of a sophisticated LLM like ChatGPT would remain severely limited, particularly for dynamic, real-time, or task-oriented scenarios. This highlights a fundamental architectural challenge in deploying LLMs: they function as powerful reasoning engines, but they necessitate effective "senses" (for data input) and "limbs" (for performing actions) to meaningfully interact with the real world. Plugins and Custom GPTs are OpenAI's strategic responses to providing these essential capabilities, thereby unlocking broader applications for their AI models.

II. The Era of ChatGPT Plugins (March 2023 - April 2024)

The initial foray into extending ChatGPT's capabilities beyond its core conversational model was marked by the introduction of ChatGPT Plugins. These optional software components were designed to significantly augment the platform's functionality and versatility. Announced by OpenAI in March 2023, plugins enabled ChatGPT to interact with external services and APIs, allowing it to perform tasks that transcended its inherent text generation abilities. This development represented a crucial step in transforming ChatGPT from a passive knowledge base into an active, actionable tool.

Concept and Core Functionality of ChatGPT Plugins

Plugins were categorized into several main types, each designed to address specific needs. Third-Party Plugins were the most accessible to general consumers, facilitating interactions with a wide array of external applications. Notable examples included the Zapier Plugin, which allowed users to interact with over 5,000 apps like Google Sheets, Gmail, and Slack directly from the ChatGPT interface, enabling task automation. The Instacart Plugin combined ChatGPT's capabilities with Instacart's AI, allowing users to order groceries within their chats, while the Visla Plugin could generate quick videos from stock footage, hinting at AI's potential in video editing. The Zillow Plugin provided property listings based on user specifics, and the Link Reader Plugin was widely used for summarizing online content directly within ChatGPT.

Beyond third-party integrations, Web Browsing Plugins were introduced to enable ChatGPT to read and summarize online content, directly addressing the limitation of the base model's knowledge cutoff. Code Interpreter Plugins empowered ChatGPT to perform programming tasks, including code generation, debugging, and data analysis, making it a valuable asset for developers and data professionals. Lastly, Retrieval Plugins offered users the ability to access personal or organizational data sources such as files, notes, and emails through natural language queries. Access to these plugins was exclusive to ChatGPT Plus subscribers, requiring manual activation within the "Beta Features" settings, with a limitation of enabling up to three plugins concurrently per chat session.

Benefits and Early Impact

The introduction of plugins yielded significant benefits, primarily in enhancing productivity and automation. They streamlined workflows by automating repetitive processes, facilitating real-time data analysis, and generally improving efficiency across various sectors. In industries like PR and marketing, plugins promised to automate tasks, improve content creation, and provide real-time data access, thereby augmenting overall efficiency, creativity, and effectiveness.

Plugins also markedly expanded ChatGPT's use cases. In web design, for instance, ChatGPT could assist with generating HTML and CSS code, debugging, and creating metadata for search engine optimization (SEO). In financial services, they enabled the retrieval of real-time information like stock prices and the analysis of banking data. Tools such as AskYourPDF allowed users to interact with and extract information from PDF documents. For software developers, plugins proved invaluable in automating testing processes, improving debugging efficiency, generating comprehensive documentation, and assisting with code generation and refactoring.

Technical Architecture and Developer Experience

From a technical standpoint, plugins were designed to extend ChatGPT's capabilities by enabling it to interact with external services via Application Programming Interfaces (APIs). The development process for a ChatGPT plugin was structured, involving several distinct steps. Developers first needed to build an application encapsulated within an API. This was followed by the creation of a standardized manifest file and an OpenAPI specification. These specifications were crucial as they precisely defined the plugin's functionality, allowing ChatGPT to interpret and make calls to the APIs defined by the developer. This process, while powerful, inherently required a degree of technical expertise in API design and adherence to specific documentation standards.
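To make the manifest step concrete, here is a minimal sketch of what such a manifest might have looked like, built as a Python dictionary and serialized to JSON. The field names follow the now-retired plugin manifest format as best recalled and should be treated as illustrative. Every value (the "Todo Demo" plugin, the example.com URLs, the contact address) is a hypothetical placeholder, not something taken from a real plugin.

```python
import json

# Field names follow the retired plugin manifest format as best recalled;
# treat this set as an assumption rather than an authoritative schema.
REQUIRED_FIELDS = {
    "schema_version", "name_for_human", "name_for_model",
    "description_for_human", "description_for_model", "auth", "api",
}


def build_manifest(base_url: str) -> dict:
    """Return a manifest dict that points ChatGPT at the plugin's OpenAPI spec."""
    return {
        "schema_version": "v1",
        "name_for_human": "Todo Demo",            # hypothetical plugin name
        "name_for_model": "todo_demo",
        "description_for_human": "Manage a simple to-do list.",
        # The model-facing description tells ChatGPT when to call the plugin.
        "description_for_model": "Use this to add, list, and delete the user's to-do items.",
        "auth": {"type": "none"},
        "api": {"type": "openapi", "url": f"{base_url}/openapi.yaml"},
        "logo_url": f"{base_url}/logo.png",
        "contact_email": "support@example.com",   # placeholder
        "legal_info_url": f"{base_url}/legal",
    }


def missing_fields(manifest: dict) -> set:
    """Report which of the assumed required fields are absent."""
    return REQUIRED_FIELDS - set(manifest)


manifest = build_manifest("https://example.com")
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

The manifest itself carried no endpoint logic. It only described the plugin and pointed to the OpenAPI specification, which is where the callable operations were defined.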

Limitations and Challenges

Despite their initial promise, ChatGPT plugins faced significant limitations and challenges that ultimately contributed to their deprecation.

User Experience Friction: A primary drawback was the cumbersome user experience. Users were restricted to enabling a maximum of three plugins simultaneously, and the process of swapping out plugins often necessitated starting a new chat session, leading to the loss of prior discussion history. This limitation severely hampered complex workflows that required dynamic interaction with multiple tools. Furthermore, the plugin store, with nearly 700 available plugins, suffered from a lack of curation. This made discovery difficult and resulted in a wide variability in quality, with many plugins being "rudimentary and experimental" or merely serving as branding exercises that offered little functional value. Common troubleshooting issues, such as plugins not appearing or loading, required users to manually verify subscriptions, enable beta features, clear browser caches, or even disable VPNs, indicating a less-than-seamless operational experience.

Quality Variability and Inconsistency: The inconsistent quality of plugins posed a significant challenge. The wide spectrum from highly advanced to basic and untested offerings made it difficult for users to anticipate a uniform and predictable experience. Even with the ability to connect to external data sources, ChatGPT, when utilizing plugins, still exhibited tendencies to "hallucinate" or generate factually inaccurate information, particularly when integrating with less reliable data sources. The model also struggled with nuanced understanding, maintaining context over lengthy dialogues, and performing complex multi-step reasoning, leading to generic or repetitive responses.

Security and Data Privacy Concerns: A critical vulnerability stemmed from the plugins' inherent dependence on external APIs. Any downtime or performance issues with these third-party APIs directly impacted the functionality of the associated plugins, leading to unreliable service. More alarmingly, security researchers uncovered critical flaws within the plugin ecosystem. These included vulnerabilities in the plugin installation process itself, which could allow attackers to inject malicious plugins and potentially intercept sensitive user messages. Flaws were also found within PluginLab, a framework used for developing ChatGPT plugins, which could lead to account takeovers on third-party platforms like GitHub. Additionally, OAuth redirection manipulation vulnerabilities were identified in several plugins, enabling attackers to steal user credentials and execute account takeovers. The requirement for plugins to access personal or sensitive data raised significant privacy concerns, as the security measures of third-party developers were not always robust. Cross-site scripting (XSS) and other vulnerabilities further exposed affected sites to malicious script injections. The overall risk of proprietary information theft and sensitive user data leakage (including Personally Identifiable Information, PII) was substantial.
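As an illustration of the OAuth redirection issue, the sketch below contrasts a loose prefix check on the redirect URI with an exact-match allowlist. It does not reproduce the specific flaws researchers reported. It only shows, under assumed names and URLs (plugin.example.com, attacker.test), why lenient redirect validation can hand an authorization code to an attacker-controlled host.

```python
# Hypothetical registered callback for an imaginary plugin.
ALLOWED_REDIRECT_URIS = {"https://plugin.example.com/oauth/callback"}


def naive_redirect_check(redirect_uri: str) -> bool:
    """Loose validation: accepts anything that merely starts with the expected origin."""
    return redirect_uri.startswith("https://plugin.example.com")


def strict_redirect_check(redirect_uri: str) -> bool:
    """Exact-match validation against the pre-registered allowlist."""
    return redirect_uri in ALLOWED_REDIRECT_URIS


legitimate = "https://plugin.example.com/oauth/callback"
# The attacker registers a host whose name begins with the trusted origin string.
malicious = "https://plugin.example.com.attacker.test/oauth/callback"

for uri in (legitimate, malicious):
    print(uri, naive_redirect_check(uri), strict_redirect_check(uri))
```

Exact matching is deliberately inflexible: any variation in host, path, or query is rejected, which is the property that closes off this class of manipulation.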

The challenges encountered during the plugin era highlight several critical dynamics. First, the open and largely uncurated nature of the plugin marketplace created a "Wild West" environment. This led to a proliferation of low-quality or non-functional plugins, which in turn degraded the overall user experience, eroded trust in the system, and made it challenging for users to identify genuinely valuable tools. This situation likely deterred developers from investing significant resources in high-quality offerings if their efforts were overshadowed by a flood of inferior alternatives. Such quality control issues signaled to OpenAI that a more managed ecosystem was essential for long-term success and widespread adoption.

Second, the design of plugins revealed an inherent tension between openness and the imperative for security and control. By allowing broad integration with external APIs and third-party services, OpenAI maximized extensibility. However, this openness directly exposed the platform to significant security vulnerabilities, including data leakage and account takeovers. This presented OpenAI with a dilemma: prioritize expansive functionality through external integrations or tighten security and control, potentially limiting the scope of applications. The prevalence of these security flaws strongly suggests that the initial plugin architecture, while innovative, leaned towards openness, possibly at the expense of robust security. This tension is a recurring theme in platform development, particularly for AI, where balancing the power of external integrations with the critical need for data security and user trust is paramount. The subsequent pivot to Custom GPTs indicates a strategic move towards greater internal control and integration within OpenAI's ecosystem to mitigate these inherent risks.

Finally, the cumulative effect of the cumbersome user experience, including the restrictive three-plugin limit and the necessity to restart chat sessions to swap plugins, acted as a significant bottleneck for adoption. Even with powerful underlying technology, a poor user experience inevitably hinders widespread engagement and sustained use. Users are generally unwilling to tolerate clunky or inefficient workflows for tasks they perform regularly. This underscores the critical importance of human-centered design in the development of AI products, as the friction in the plugin experience directly contributed to user frustration and played a substantial role in OpenAI's decision to pursue a more streamlined and integrated solution.

III. The Strategic Pivot to Custom GPTs (November 2023 - Present)

The challenges and limitations of the plugin ecosystem prompted a significant strategic pivot by OpenAI, leading to the introduction and rapid adoption of Custom GPTs. This transition marked a new era in AI customization, emphasizing greater flexibility, ease of development, and a more integrated application environment.

OpenAI's Rationale for Phasing Out Plugins

OpenAI officially announced the winding down of plugin support in February 2024. The ability to initiate new conversations via plugins ceased on March 19, 2024, with all existing plugin-based chats being shut down by April 9, 2024. This decisive and swift deprecation signaled a fundamental shift in OpenAI's extensibility strategy.

The primary motivation behind this pivot was to address the shortcomings and limitations inherent in the plugin architecture. OpenAI explicitly stated that Custom GPTs offer "far greater flexibility" and represent a "better way to reach ChatGPT users". Key improvements and rationales included:

Ease of Development: A major driving factor was the significantly lower barrier to entry for creators. Developers found it "much easier to build GPTs than plugins", largely due to the introduction of the "GPT Builder", which facilitates a "no-code setup using natural language". This democratization of AI creation enabled a broader audience to develop specialized AI tools.

Enhanced Functionality & Integration: Custom GPTs were designed to "replicate and surpass plugin functionality" through the use of "actions". Crucially, they integrated core capabilities such as Code Interpreter, Web Browsing, and DALL·E directly into the platform. This internal integration reduced reliance on external, often inconsistent, third-party plugins for fundamental functionalities.

Improved User Experience: The ability for users to seamlessly switch between distinct, specialized Custom GPTs for different tasks was perceived as "way quicker and more intuitive" compared to the manual enabling and disabling of plugins within a single conversation. This addressed a significant point of user friction from the plugin era.

Scalability and Discovery: The launch of the GPT Store in January 2024 dramatically improved the discoverability of specialized AI tools. This centralized marketplace offered a stark contrast to the uncurated and often difficult-to-navigate plugin marketplace.

Introduction to Custom GPTs

Custom GPTs represent a paradigm shift in how users interact with and customize AI. At their core, Custom GPTs are specialized versions of ChatGPT tailored for specific domains, contexts, or tasks. They are not separately trained models. Instead, unlike the general-purpose ChatGPT, these custom versions are configured with explicit instructions and can draw on a proprietary knowledge base that extends beyond OpenAI's default training data. This foundational capability empowers users to create highly focused AI assistants designed to address very specific needs, whether for personal, professional, or business applications.

A defining characteristic of Custom GPTs is their "no-code" creation process. This innovation allows creators to define the desired functionality of their GPTs simply by describing their vision to the GPT Builder in natural language. The system then guides them through the setup process, eliminating the need for traditional programming skills. This approach significantly democratizes AI development, making it accessible to a much broader audience, including domain experts, small business owners, and individuals without a technical background. Furthermore, Custom GPTs offer extensive personalization capabilities. They can be precisely tailored to reflect specific tones, communication styles, or brand preferences, making interactions more personalized and engaging for the end-user.

Key Features and Enhanced Capabilities

Custom GPTs boast a suite of enhanced features and capabilities that significantly surpass their plugin predecessors:

Built-in Tools: A major improvement is the native integration of powerful tools directly within the Custom GPT framework. These capabilities, which previously required external plugins or separate features, now include:

Web Browsing: This allows the Custom GPT to access real-time information from the internet, effectively overcoming the knowledge cutoff limitation inherent in the base LLM.

Code Interpreter: This feature enables the execution of Python code, facilitates data analysis, and assists with debugging directly within the conversational interface.

DALL·E: Custom GPTs can leverage DALL·E for image generation directly from text prompts, supporting a wide range of creative tasks.

"Actions" for External API Integration: Custom GPTs introduce "Actions" as the mechanism for calling external APIs, functionally similar to how plugins operated. Developers define these API endpoints using OpenAPI schemas (in JSON or YAML format) and configure appropriate authentication methods. This enables GPTs to fetch dynamic data, trigger workflows, or send updates to external tools, facilitating real-world tasks such as booking meetings, sending emails, or querying customer relationship management (CRM) data.

Improved Memory and Context Handling: Custom GPTs are designed with more comprehensive memory capabilities. They can maintain a persistent knowledge base through the direct upload of various documents, including PDFs, text files, and markdown files. This allows them to provide more accurate and contextually relevant responses by drawing upon proprietary or domain-specific data. The architecture is also tailored to handle longer conversational contexts, leading to "smarter responses" that build upon previous interactions.

Multi-Model Support: A recent enhancement allows creators to select from a full suite of ChatGPT models, including GPT-4o, GPT-3.5, and Mini versions, when constructing Custom GPTs. This capability lets creators match the underlying model to highly specific tasks, industries, and workflows (a matter of model selection rather than fine-tuning in the training sense), optimizing the AI's behavior for its intended purpose.

Versatility and Scalability: Custom GPTs are engineered for versatility, capable of addressing a broad spectrum of tasks without consistently relying on external data sources or APIs, thereby reducing third-party service dependencies. They are also inherently scalable, designed to handle increased traffic and capable of deployment across multiple platforms.
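The "Actions" mechanism listed above can be sketched with a minimal OpenAPI document. The example below builds one in Python for a hypothetical CRM lookup endpoint and lists the operations a GPT would be able to call. The server URL, path, and `getCustomer` operation name are assumptions made for illustration, and authentication is omitted for brevity.

```python
import json

# HTTP methods that may appear as keys of an OpenAPI path item.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "options", "head", "trace"}


def build_action_schema(server_url: str) -> dict:
    """Return a minimal OpenAPI 3.1 document with a single GET operation."""
    return {
        "openapi": "3.1.0",
        "info": {"title": "CRM Lookup (hypothetical)", "version": "1.0.0"},
        "servers": [{"url": server_url}],
        "paths": {
            "/customers/{customer_id}": {
                "get": {
                    # A unique operationId names each callable operation.
                    "operationId": "getCustomer",
                    "summary": "Fetch one customer record by ID.",
                    "parameters": [{
                        "name": "customer_id",
                        "in": "path",
                        "required": True,
                        "schema": {"type": "string"},
                    }],
                    "responses": {"200": {"description": "Customer record"}},
                }
            }
        },
    }


def list_operations(schema: dict) -> list[tuple[str, str, str]]:
    """Return (operationId, METHOD, path) for every operation in the schema."""
    operations = []
    for path, path_item in schema["paths"].items():
        for method, operation in path_item.items():
            if method in HTTP_METHODS:
                operations.append((operation["operationId"], method.upper(), path))
    return operations


schema = build_action_schema("https://api.example.com")
print(json.dumps(schema, indent=2))
print(list_operations(schema))
```

The JSON printed here is the kind of schema that would be supplied when configuring an Action. The summaries and parameter descriptions matter because they are what the model reads when deciding whether and how to call the endpoint.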

The GPT Store: A New Ecosystem

The launch of the GPT Store in January 2024 marked a significant milestone, establishing a marketplace akin to Apple's App Store, where users can discover, utilize, and share custom versions of ChatGPT. This platform serves as a centralized hub for a vast array of specialized AI applications developed by both OpenAI and its burgeoning community of creators.

The impact on developer adoption has been profound. The simplified creation process, combined with the enhanced discoverability offered by the GPT Store, led to an explosive growth in the ecosystem. Within a short period, hundreds of thousands of Custom GPTs became available, a dramatic increase compared to the mere 1,000 or so plugins at their peak. This rapid expansion unequivocally demonstrated a clear preference among the developer community for the Custom GPT framework.

To further incentivize participation and foster a vibrant community, OpenAI announced plans for a revenue-sharing program designed to reward creators based on the user engagement their GPTs generate. This financial incentive is intended to turn the creation of Custom GPTs from a hobby into a potential business opportunity, cultivating a continuous supply of innovative and useful AI applications. For ChatGPT Team and Enterprise users, the GPT Store also provides enhanced administrative controls, enabling secure sharing of internal-only GPTs and granular management over which external GPTs can be utilized within their organizations.

This strategic pivot to Custom GPTs and the establishment of the GPT Store reveal several key underlying dynamics. First, there is a clear shift from a "tool integration" approach to one of "AI specialization." While plugins primarily focused on connecting ChatGPT to existing external tools, Custom GPTs place a strong emphasis on configuring ChatGPT itself with "custom instructions," building "knowledge bases," and leveraging "domain-specific data." This signifies a strategic move from merely extending ChatGPT's reach to external services to enabling ChatGPT to become a highly specialized AI agent for particular tasks or domains. The focus has evolved from what tools ChatGPT could use to what specialized AI agent ChatGPT could embody. This indicates OpenAI's long-term vision is not just a general-purpose chatbot, but a collection of finely tuned, intelligent agents, each optimized for specific professional or personal needs, moving beyond simple automation to deep domain expertise.

Second, the democratization of AI creation is a deliberate and potent growth strategy. The emphasis on a "no-code" creation process for Custom GPTs and the marketplace model of the GPT Store are central to this. OpenAI recognized that the technical barriers of traditional plugin development limited adoption. By lowering this barrier and making AI creation accessible to non-programmers, they dramatically expanded the potential creator base, leading to the rapid proliferation of Custom GPTs. This strategic choice directly addresses the limitation of a smaller developer community for plugins, fostering a much larger and more diverse ecosystem of AI applications. This is a clear demonstration that ease of development is a critical factor for platform growth in the rapidly evolving AI space.

Third, the integration of monetization as a community catalyst is a crucial element of this strategy. The planned revenue-sharing program within the GPT Store provides a significant financial incentive for creators. This transforms the development of Custom GPTs from a purely experimental or hobbyist endeavor into a potential business opportunity, thereby attracting and retaining high-quality developers. By aligning developer incentives with user engagement, OpenAI aims to cultivate a self-sustaining ecosystem, akin to the successful models of existing app stores. This strategic move is designed to ensure a continuous supply of innovative and useful Custom GPTs, which in turn drives further platform adoption and solidifies OpenAI's position at the forefront of the AI application layer.

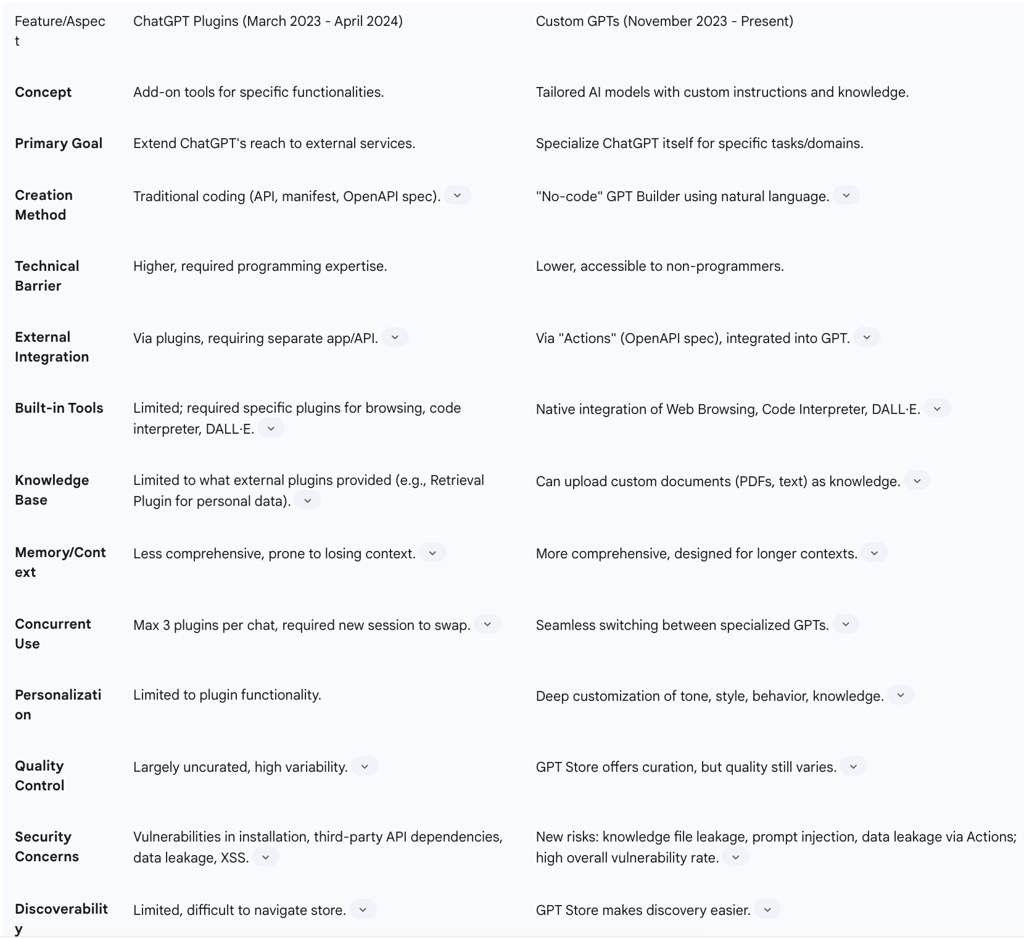

IV. Comparative Analysis: Custom GPTs vs. Plugins

The transition from ChatGPT plugins to Custom GPTs represents a significant architectural and strategic evolution. A detailed comparative analysis across key dimensions reveals how Custom GPTs aim to address the shortcomings of their predecessors and their broader implications for the AI ecosystem.

User Experience and Personalization

The user experience (UX) was a critical differentiator. Plugins required users to manually enable or disable them and imposed a strict limitation of three active plugins per chat session. This often forced users to initiate new chat sessions to swap out plugins, resulting in a fragmented and cumbersome workflow that disrupted the conversational flow and led to a loss of discussion history. Personalization was largely confined to the specific functionalities offered by individual plugins, with limited ability to tailor the AI's overall behavior or persona.

In contrast, Custom GPTs offer a significantly more seamless and intuitive experience. Users can effortlessly switch between distinct, specialized GPTs for different tasks, effectively eliminating the need to manage multiple tools within a single conversation. This shift facilitates a deeper level of personalization through the integration of custom instructions, the ability to upload proprietary knowledge files, and the definition of predefined behaviors. This enables the AI to adopt specific tones, styles, and embody domain-specific expertise, leading to interactions that are more personalized and engaging. The outcome is a more accurate, relevant, and efficient AI interaction tailored to individual or organizational needs.

This evolution in user experience can be understood as a fundamental shift from a "toolbox" approach to a "specialist assistant" paradigm. Plugins functioned much like a collection of disparate tools that users had to manually select and combine for specific tasks. This placed the burden of orchestration and workflow management squarely on the user. Custom GPTs, conversely, are presented as "tailor-made AI assistants" or "specialists". This represents a profound change in the user interaction model. Instead of users needing to actively orchestrate multiple tools, the Custom GPT itself is pre-configured and optimized to handle a specific domain or task. This inherent specialization simplifies the user's mental model of interaction, significantly reducing cognitive load. This move aligns with a broader trend in AI development towards more autonomous and intelligent agents that can comprehend complex contexts and execute multi-step tasks without explicit, granular human guidance. It elevates the perception of AI from a reactive tool to a proactive, domain-aware assistant.

Developer Workflow and Adoption

The developer workflow for plugins involved a more traditional software development lifecycle. Creators were required to build a separate application with its own API, along with a standardized manifest file and an OpenAPI specification for integration with ChatGPT. While this approach offered substantial benefits for developers in terms of automating testing, debugging, and code generation, it inherently posed a higher technical barrier to entry. Consequently, the plugin marketplace remained relatively limited, peaking at approximately 1,000 offerings.

Custom GPTs, by contrast, significantly lower this technical barrier through their "no-code" GPT Builder. This interface allows creators to define the desired functionality of their AI assistant using natural language prompts. Developers can directly upload knowledge files (e.g., PDFs, text, markdown) to imbue the GPT with specific information. While integrating external APIs via "Actions" still requires the use of OpenAPI specifications and can be technically involved, the overall ease of creation for basic to moderately complex GPTs has been a game-changer. This simplified workflow has led to an explosion in the number of available GPTs, reaching "hundreds of thousands", unequivocally demonstrating an overwhelming preference among the developer community for this new paradigm.

The overwhelming success of Custom GPTs in terms of developer adoption underscores the "no-code" imperative for mass adoption in the AI space. The shift from a traditional coding requirement for plugins to a natural language interface for Custom GPTs dramatically expanded the pool of potential creators. OpenAI recognized that developer adoption scales exponentially when the technical barrier to entry is lowered. The "no-code" approach democratizes AI creation, enabling domain experts, small businesses, and individuals without programming skills to build custom solutions tailored to their specific needs. This strategic move directly addresses the limitation of a smaller, more specialized developer base for plugins, leading to a much larger and more diverse ecosystem of AI applications. It serves as a clear demonstration that ease of development is a critical factor for platform growth and innovation in the rapidly evolving AI landscape.

However, it is important to acknowledge an enduring challenge related to complex API integration. While Custom GPTs are largely "no-code" for their foundational setup and knowledge ingestion, the integration of external APIs via "Actions" still necessitates the writing and maintenance of OpenAPI specifications. Some observations suggest that "GPT actions didn't stick" for many users precisely because they remained "too technical" for the non-niche audience. This reveals a potential contradiction within the overarching "no-code" promise. While basic customization is now highly accessible, achieving advanced integrations with external systems for complex automations continues to demand a degree of technical expertise. This suggests that while OpenAI has successfully democratized basic AI creation, the frontier of sophisticated, deeply integrated AI solutions might still have a ceiling for truly non-technical users. This ongoing challenge pushes the boundaries for future AI development tools, highlighting an area where further simplification or abstraction may be required.

Technical Architecture and Integration

The technical architecture of plugins primarily relied on ChatGPT acting as an orchestrator for external API calls. This system allowed for multiple plugins to interact within a single conversation, a feature that could be "powerful and very useful". However, this "mix-and-match" functionality also proved to be "unpredictable and uncontrollable", leading to concerns about data sharing among various plugins and potential "security holes".

Custom GPTs represent a more integrated architectural approach. Core capabilities such as web browsing, code interpretation, and DALL·E are now built directly into the platform rather than supplied by third parties. This internalizes frequently used functionalities, providing OpenAI with greater control over their performance, reliability, and security, and reducing dependency on external third-party developers for these core features. "Actions" within Custom GPTs still enable calls to external APIs, mirroring plugin functionality through OpenAPI specifications. While the initial design of Custom GPTs limited the ability to combine "Actions" from different authors in a single interaction, this capability has seen some evolution. Furthermore, memory handling in Custom GPTs is designed to be more comprehensive, allowing for superior context retention compared to plugins, which sometimes struggled with maintaining conversational context over time.

The centralization of core tools within Custom GPTs for stability and performance is a significant architectural decision. By integrating functionalities like browsing, Code Interpreter, and DALL·E as built-in capabilities, OpenAI has gained enhanced control over their performance, reliability, and security. This strategic move reduces dependency on third-party developers for these foundational features, leading to a more stable and efficient user experience. This decision is a direct response to the quality variability and performance inconsistencies observed with external plugins, signifying a deliberate effort to ensure that core capabilities are robust and seamlessly integrated, thereby providing a more consistent and predictable user experience.

However, the challenge of multi-tool orchestration continues to evolve. Plugins allowed a "mix-and-match" functionality that, despite its power, often resulted in "unpredictable and uncontrollable" behavior and security concerns, whereas Custom GPTs initially imposed limitations on combining actions from different authors. This indicates that OpenAI is still grappling with the inherent complexity of making multiple AI tools or services work in tandem. Such orchestration holds immense potential, but it makes it harder to maintain conversational coherence, ensure robust security, and achieve predictable outcomes. How to facilitate complex, multi-agent or multi-tool workflows both safely and effectively remains an open problem in AI development. The architectural evolution from plugins to Custom GPTs suggests a more controlled and integrated approach to this problem, potentially paving the way for future advancements in "meta-GPTs" or sophisticated AI agents capable of intelligently chaining disparate actions.

Security Model Evolution

Security was a major concern during the plugin era. Plugins were susceptible to significant flaws, including vulnerabilities in their installation process, framework-level issues (e.g., PluginLab), OAuth redirection manipulation, and cross-site scripting (XSS) vulnerabilities. These vulnerabilities presented substantial risks, including account takeovers, data theft, and the unauthorized leakage of proprietary information.
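The text above does not describe how the reported OAuth redirection flaws worked in detail, so the following is only a general illustration of the class of problem, not a reconstruction of any specific plugin bug. It contrasts a loose prefix check on a redirect URI with an exact-match check against registered values. The host names and function names are hypothetical.

```python
# Hypothetical registered callback; the host and path are invented for illustration.
REGISTERED_REDIRECTS = frozenset({"https://chat.example.com/oauth/callback"})


def naive_prefix_check(redirect_uri: str) -> bool:
    """Unsafe: accepts any URI that merely starts with a trusted-looking prefix."""
    return redirect_uri.startswith("https://chat.example.com")


def exact_match_check(redirect_uri: str) -> bool:
    """Safer: accepts only a URI exactly equal to a registered one."""
    return redirect_uri in REGISTERED_REDIRECTS


# An attacker-controlled host that happens to begin with the trusted prefix.
attacker_uri = "https://chat.example.com.attacker.test/oauth/callback"

print(naive_prefix_check(attacker_uri))  # True: the loose check is fooled
print(exact_match_check(attacker_uri))   # False: the exact check rejects it
```

If an authorization code or token is delivered to a manipulated redirect target, the attacker can complete the login flow in the victim's place. This is why account takeover appears among the risks listed above.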

While Custom GPTs were designed with improved security in mind, new and evolving concerns have emerged. The ability to upload knowledge files introduces a risk of accidental leakage of sensitive data if the Custom GPT is subsequently published or shared. Furthermore, "Actions" within Custom GPTs can still lead to data leakage to third-party APIs and remain susceptible to indirect prompt injections. Recent research indicates a concerning statistic: over 95% of Custom GPTs currently lack adequate security protections. This vulnerability often stems from weaknesses inherited or amplified from OpenAI's foundational models. Specific prevalent vulnerabilities include susceptibility to roleplay-based attacks, system prompt leakage, and phishing attempts.
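One practical response to the knowledge-file risk is to scan a document for obviously sensitive strings before uploading it. The sketch below is a rough illustration under stated assumptions: the two regular expressions are hypothetical, simplified patterns (an email address and a token-like string) that will miss many real secrets and may flag harmless text, and `find_sensitive` is not part of any OpenAI tooling.

```python
import re

# Hypothetical, deliberately simple patterns; real secret scanners use far richer rules.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "secret_like_token": re.compile(r"\b(?:sk|key|token)[-_][A-Za-z0-9]{16,}\b"),
}


def find_sensitive(text):
    """Return (line number, pattern label) pairs for every line that matches a pattern."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


sample = (
    "Quarterly summary\n"
    "Contact: jane.doe@example.com\n"
    "api = sk-abcdefghijklmnop1234\n"
)
print(find_sensitive(sample))  # [(2, 'email'), (3, 'secret_like_token')]
```

A check like this only reduces accidental exposure. It does nothing against prompt injection or system prompt leakage, which have to be addressed in the GPT's instructions and in how its "Actions" are configured.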

This landscape indicates a shifting security paradigm rather than a complete elimination of risk. While some plugin-specific vulnerabilities may have been mitigated, Custom GPTs introduce new attack vectors, particularly related to the handling of "knowledge" files and the interaction with external APIs via "Actions". This implies a continuous and dynamic challenge in AI security, akin to a cat-and-mouse game between developers and malicious actors. A critical aspect of this evolving security model is the increased responsibility placed on the user or creator. The security posture of Custom GPTs heavily relies on the creator's understanding and implementation of robust security best practices, particularly concerning the data uploaded to the GPT and the configuration of its "Actions". The high reported vulnerability rate suggests a significant gap in user awareness, available security tools, or both. Furthermore, the finding that "OpenAI's foundational models exhibit inherent security weaknesses, which are often inherited or amplified in custom GPTs" points to a deeper, underlying issue that impacts all derived applications built on these models, necessitating continuous vigilance and improvements at the platform level.

Market Impact and Ecosystem Dynamics

The plugin ecosystem, while innovative, created a nascent marketplace with just over 1,000 offerings. However, its growth and broader market impact were hindered by significant quality variability and limited discoverability.

The introduction of Custom GPTs and the GPT Store has dramatically transformed the market dynamics. The ecosystem has rapidly expanded to encompass "hundreds of thousands" of GPTs. This democratization of AI application development has allowed a far wider range of users to create and share specialized AI tools, fostering an explosion of innovation and new use cases across diverse industries, including marketing, sales, finance, HR, and business strategy. The planned revenue-sharing program further incentivizes community participation and provides a pathway for monetization, aligning creator interests with platform growth.

The strategic decision to adopt an "App Store" model serves as a powerful ecosystem accelerator. The GPT Store explicitly draws parallels with established marketplaces like Apple's App Store and Google's Play Store. Its launch directly led to a massive increase in the number of available GPTs compared to the previous plugin model. This demonstrates OpenAI's strategic leverage of a proven ecosystem model to scale AI application development. By providing a centralized marketplace, user-friendly creation tools, and compelling monetization opportunities, OpenAI is generating a powerful flywheel effect for innovation and adoption. This signifies a maturation of the AI application layer, moving from experimental integrations to a more structured, market-driven development paradigm. It strategically positions OpenAI not merely as a model provider but as a comprehensive platform provider for AI solutions, with the potential to disrupt traditional software markets by enabling the rapid creation and deployment of highly specialized, AI-native applications.